Building The Hotdog/Not-Hotdog Classifier From HBO's Silicon Valley

My experience building the Hotdog/Not-Hotdog app created by Jian-Yang in HBO's Silicon Valley

Ever since I watched the Hotdog/Not-Hotdog app created by the wild-card app developer Jian-Yang in HBO’s Silicon Valley, I have wanted to create it. And now finally, I have! But due to my lack of app development skills, I created only the machine learning classifier that the app would use. Still, building the brain of the app is pretty cool, and it makes for a fun data science project. So, here’s my experience.

Note: Find the Jupyter Notebooks here.

“It’s not magic. It’s talent and sweat.” — Gilfoyle

Can’t Learn Without Data

The first step of any data science or machine learning or deep learning or Artificial Intelligence project is getting the data. You can create the most sophisticated algorithms and run your ML models on the craziest GPUs or TPUs, but if your data is not good enough then you won’t be able to make any progress on your ML task. So, the first step was to get the data.

ImageNet to our Rescue!

I used the ImageNet website to get the data. For the hotdogs, I searched for ‘hotdog’, ‘frankfurter’ and ‘chili-dog’. And for the ‘Not Hotdog’ part, I searched for ‘pets’, ‘furniture’, ‘people’ and ‘food’. I chose these because they are the kinds of pictures people are most likely to take while using such an app. I used a small script to download all these images from ImageNet and delete the invalid/broken ones. Then, I went through all the images and manually deleted the remaining broken and irrelevant images. Finally, I had 1822 images of hotdogs and 1822 images of “not hotdogs”.

Note: I had over 3000 images of “not hotdogs” but I randomly chose 1822 out of them in order to balance both the classes.
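The balancing step in the note above can be sketched in a few lines. This is only an illustration: the function name `balance_classes`, the fixed seed and the placeholder file names are assumptions, not necessarily what the notebooks do.

```python
import random


def balance_classes(majority, target_count, seed=42):
    """Randomly keep `target_count` items from the larger class."""
    # A fixed seed (an assumption) makes the random subset reproducible.
    rng = random.Random(seed)
    return rng.sample(list(majority), target_count)


# Placeholder file names standing in for the real "not hotdog" downloads.
not_hotdogs = [f"nothotdog_{i}.jpg" for i in range(3000)]

# Keep as many "not hotdog" images as there are hotdog images (1822).
balanced_not_hotdogs = balance_classes(not_hotdogs, 1822)
print(len(balanced_not_hotdogs))
```

Sampling without replacement means no image is picked twice, so the smaller set is a true subset of the original.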

Now, I had all the data that I needed to create this classifier.

Make Data Better

The next step was to make the data better. First, I wrote a small script to resize all the images to 299x299 resolution so that all the images are of the same size and I wouldn’t have to hit various incompatibility problems while loading these images.
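A resizing script along these lines would do the job. This is a minimal sketch that assumes the Pillow library and `.jpg` files; the helper names `resize_image` and `resize_folder` are made up for illustration.

```python
from pathlib import Path

from PIL import Image

TARGET_SIZE = (299, 299)  # the resolution used throughout this post


def resize_image(img, size=TARGET_SIZE):
    """Return an RGB copy of `img` stretched to `size` (aspect ratio is not preserved)."""
    return img.convert("RGB").resize(size)


def resize_folder(src_dir, dst_dir, size=TARGET_SIZE):
    """Resize every .jpg in `src_dir` and save the result under `dst_dir`."""
    dst = Path(dst_dir)
    dst.mkdir(parents=True, exist_ok=True)
    for path in sorted(Path(src_dir).glob("*.jpg")):
        with Image.open(path) as img:
            resize_image(img, size).save(dst / path.name)
```

Converting to RGB first also takes care of the occasional grayscale or palette image, which would otherwise have a different number of channels.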

It is also important to structure the data properly in the directories. I structured the data in the following way:

Dataset —> (train —> (hotdog, nothotdog), test —> (hotdog, nothotdog), valid —> (hotdog, nothotdog))

I structured the dataset this way because Keras expects one subdirectory per class when it loads images from a directory.
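Here is a rough sketch of how files could be copied into that layout. The split fractions (10% validation, 10% test) and the helper name `split_into_dirs` are assumptions for illustration; the post does not state the actual split sizes.

```python
import random
import shutil
from pathlib import Path


def split_into_dirs(files, class_name, root, valid_frac=0.1, test_frac=0.1, seed=0):
    """Copy `files` into root/{train,valid,test}/class_name and return the split sizes."""
    files = sorted(files)
    random.Random(seed).shuffle(files)  # shuffle so each split is a random sample
    n_valid = int(len(files) * valid_frac)
    n_test = int(len(files) * test_frac)
    splits = {
        "valid": files[:n_valid],
        "test": files[n_valid:n_valid + n_test],
        "train": files[n_valid + n_test:],
    }
    for split, members in splits.items():
        target = Path(root) / split / class_name
        target.mkdir(parents=True, exist_ok=True)
        for f in members:
            shutil.copy(f, target / Path(f).name)
    return {split: len(members) for split, members in splits.items()}
```

Calling it once per class (for example with `class_name="hotdog"` and then `class_name="nothotdog"`) produces the directory tree shown above.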

First Iteration — Creating a Basic ConV Model

In the first iteration, I created a simple Convolutional Model with two ConV layers and three fully-connected layers. Instead of loading the entire image dataset into memory (as a NumPy array), I used Keras generators to load the images in batches at runtime. I also used the ImageDataGenerator class in Keras to augment the data at runtime, batch by batch.
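To make that concrete, here is a sketch of what such a model and generator might look like in Keras. Only the layer counts (two ConV, three fully-connected) come from the description above; the filter counts, pooling sizes, optimizer and augmentation settings are all assumptions.

```python
from tensorflow import keras
from tensorflow.keras import layers


def build_basic_model(input_shape=(299, 299, 3)):
    """Two convolutional layers followed by three fully-connected layers."""
    model = keras.Sequential([
        keras.Input(shape=input_shape),
        layers.Conv2D(32, 3, activation="relu"),
        layers.MaxPooling2D(4),
        layers.Conv2D(64, 3, activation="relu"),
        layers.MaxPooling2D(4),
        layers.Flatten(),
        layers.Dense(128, activation="relu"),
        layers.Dense(64, activation="relu"),
        layers.Dense(1, activation="sigmoid"),  # single output: hotdog or not
    ])
    model.compile(optimizer="adam", loss="binary_crossentropy", metrics=["accuracy"])
    return model


def make_train_generator(train_dir, batch_size=32):
    """Yield augmented image batches read from train_dir/<class_name>/ at runtime."""
    datagen = keras.preprocessing.image.ImageDataGenerator(
        rescale=1.0 / 255,
        horizontal_flip=True,
        rotation_range=20,
        zoom_range=0.2,
    )
    return datagen.flow_from_directory(
        train_dir,
        target_size=(299, 299),
        batch_size=batch_size,
        class_mode="binary",
    )
```

The generator reads only one batch of images from disk at a time, which is what keeps the whole dataset out of memory.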

This model took about 50 minutes to train with 50 epochs and gave an accuracy of 71.09% on the test set.

This was a pretty low accuracy for such a simple task. I mean, human accuracy for this task has to be ~100%. This got me thinking and I decided to take it up a notch for iteration #2.

Second Iteration — Transfer Learning

I decided to use Transfer Learning to make this classifier better and faster to train. This time, I couldn’t augment the data in real time: the approach I used computes the pre-trained network’s features for each image once, up front, so there is no per-batch step where random augmentation could happen. So, I wrote a script to augment the data ahead of time and create a better dataset. I applied the following transformations:

- Flip Horizontally

- Flip Vertically

- Rotate images at a certain angle

- Shear and Zoom images
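The transformations listed above can be sketched with Pillow as follows. The specific angle, shear factor and zoom factor are assumptions picked for illustration, and `augment` is a hypothetical helper name.

```python
from PIL import Image, ImageOps


def augment(img, angle=20, shear=0.2, zoom=1.2):
    """Return augmented copies of a PIL image, each the same size as the input."""
    w, h = img.size
    # Zoom in: crop the central region and scale it back up to the original size.
    crop_w, crop_h = int(w / zoom), int(h / zoom)
    left, top = (w - crop_w) // 2, (h - crop_h) // 2
    zoomed = img.crop((left, top, left + crop_w, top + crop_h)).resize((w, h))
    return {
        "flip_h": ImageOps.mirror(img),   # left-right flip
        "flip_v": ImageOps.flip(img),     # top-bottom flip
        "rotate": img.rotate(angle),      # counter-clockwise, corners filled with black
        "shear": img.transform((w, h), Image.AFFINE, (1, shear, 0, 0, 1, 0)),
        "zoom": zoomed,
    }
```

Saving each returned copy next to its original is one way to multiply the size of the dataset ahead of training.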

This data augmentation led to dataset version 2.0, which had 7822 hotdog images and 7822 “not hotdog” images. This was a much larger dataset, so I expected some increase in the accuracy of the classifier. Now, it is much better to actually get new data than to apply data augmentation, as taught by Andrew Ng in the deeplearning.ai course, but since getting new data wasn’t an option, data augmentation can still make at least some improvement in the classifier. Now that I had a relatively larger and better dataset, it was time for INCEPTION!

No, not the one by Christopher Nolan.

I decided to use InceptionNet V3 because it generally gives better ImageNet results than VGGNet and ResNet50. Keras provides an Applications module for using these pre-trained architectures quite easily. So, I downloaded the pre-trained InceptionV3 model and weights and then ran the dataset through this model to get the bottleneck features of the dataset. This is done by basically removing the fully-connected layers from the InceptionNet and passing the data through only the ConV layers. This process converts our raw images into feature vectors.

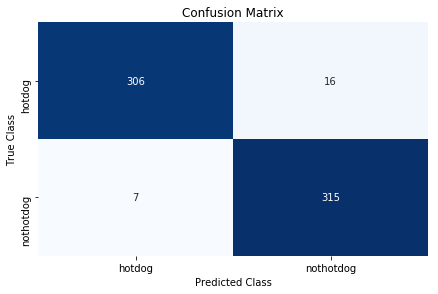
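Extracting bottleneck features with Keras might look roughly like this. It is a sketch under assumptions: the helper names are made up, and the images are expected as a float array with pixel values in the 0 to 255 range.

```python
import numpy as np
from tensorflow.keras.applications.inception_v3 import InceptionV3, preprocess_input


def build_feature_extractor(weights="imagenet"):
    """InceptionV3 without its fully-connected top, so it outputs feature maps."""
    return InceptionV3(weights=weights, include_top=False, input_shape=(299, 299, 3))


def bottleneck_features(extractor, images):
    """Run a batch of images (n, 299, 299, 3) through the convolutional base only."""
    # preprocess_input rescales pixels to the range InceptionV3 expects;
    # the copy keeps the caller's array untouched.
    batch = preprocess_input(np.array(images, dtype="float32", copy=True))
    return extractor.predict(batch, verbose=0)
```

For 299x299 inputs, each image comes out as an 8x8x2048 block of features. Those blocks can be saved to disk once and reused for every later training run, which is a large part of why the second model trains so much faster.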

Now we create a simple ConV model (the same as in iteration #1) and train it on these bottleneck features. This model took about 5 minutes to train and gave an accuracy of 96.42% on the test set. That’s pretty good accuracy for so little data. Since accuracy is not always the best metric to measure a model’s performance, I also created a confusion matrix.
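For anyone unfamiliar with confusion matrices, here is a tiny worked example in plain Python. The labels below are made-up toy values chosen to show the mechanics; they are not the classifier’s actual predictions.

```python
def confusion_matrix(y_true, y_pred):
    """Rows are true classes, columns are predicted classes (0 = not hotdog, 1 = hotdog)."""
    matrix = [[0, 0], [0, 0]]
    for truth, pred in zip(y_true, y_pred):
        matrix[truth][pred] += 1
    return matrix


# Toy labels for illustration only.
y_true = [1, 1, 1, 0, 0, 0, 1, 0]
y_pred = [1, 0, 1, 0, 0, 1, 1, 0]

cm = confusion_matrix(y_true, y_pred)
tn, fp = cm[0]  # true "not hotdog": correctly rejected / wrongly called hotdog
fn, tp = cm[1]  # true hotdog: missed / correctly found
accuracy = (tp + tn) / len(y_true)
print(cm, accuracy)  # [[3, 1], [1, 3]] 0.75
```

Unlike a single accuracy number, the matrix shows which kind of mistake the model makes: missing real hotdogs (false negatives) or seeing hotdogs that aren’t there (false positives).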

Looking at the misclassified images, many of the ones that the classifier labeled as “not hotdog” didn’t really have a good view of the hotdog, or had more than just a hotdog in them. Although, why it classified a group of people as a hotdog is a mystery I wish to solve one day!

Wait, what did we just do?

- We took an idea from a TV show and decided to go through the entire ML process using that idea.

- First, we collected the data because that’s what powers our ML models. In this case, we got images of hotdogs and some other things that aren’t hotdogs.

- We did some data preprocessing and removed broken/useless images and resized all images to the same size.

- We created a simple ConV model and used real-time data augmentation. The results we got didn’t impress us much. So, in the pursuit of happyness and better accuracy, we decided to go a step further.

- We used transfer learning to get better results. From the roster of pre-trained networks, we chose the InceptionV3 network and got the bottleneck features.

- We trained a network similar to the first network on these bottleneck features and got a much better accuracy.

- We plotted some metrics like loss, accuracy and confusion matrix because graphs are cool and useful.

- We sit back and smile at our recent accomplishment and start thinking about what to build next.

So that’s it. I created a pretty good classifier to tell me if something is a hotdog or not. And as Jian-Yang believed, this is the next billion-dollar idea!

“It’s intoxicating. Don’t act like it’s not magical. It is!” — Jared