Activation Functions Demystified

An introduction to activation functions used in neural networks.

With great deep learning resources comes great deep learning jargon.

If you’ve been studying deep learning for a while, you must have come across a lot of jargon associated with the field. And for beginners, it can feel pretty overwhelming. So, if you’ve come across the term “activation functions” and find yourself confused, don’t worry. I’ve got you covered.

Before we start talking about activation functions, let us first understand what exactly an activation is.

So what is an activation?

An activation is just a number. That’s all an activation is. As we know, the core of deep learning is the following equation:

> y = W * x + b

Once we calculate this equation, the value that y holds is called an activation. Every node in the neural network that calculates this equation holds its own value of y, and all those values are called activations. That is all there is to an activation. No magic. No complicated explanation. No hidden concepts. It’s simply a number.
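
To make that concrete, here is a minimal sketch in plain Python of one layer with three nodes computing y = W * x + b. The weights, inputs, and biases are made-up numbers, and the helper name `linear` is my own, not something from a library.

```python
# Hypothetical numbers for one layer with 3 nodes and 2 inputs.
W = [[0.5, -1.0],
     [1.5, 2.0],
     [-0.5, 0.25]]
x = [2.0, 1.0]
b = [0.1, -0.2, 0.0]

def linear(W, x, b):
    """Compute y = W * x + b, giving one value per node (one per row of W)."""
    return [sum(w * v for w, v in zip(row, x)) + b_i
            for row, b_i in zip(W, b)]

activations = linear(W, x, b)
print(activations)  # three plain numbers, one activation per node
```

Each entry in `activations` is just a number, which is the whole point.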

Alright, guy on the Internet. I get it. But what is an activation function then?

An activation function is simply a mathematical function that is applied to an activation in order to introduce some non-linearity in our network. Confused? Read on.

A neural network is basically a combination of multiple linear and non-linear functions. The equation y = W * x + b is a linear function. But if every layer in our network were linear, the whole network would collapse into a single-layer network, because a linear function of a linear function is still linear. Such a network could only learn linear relationships between its inputs and outputs.
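
If you want to see that collapse with your own eyes, here is a small sketch in plain Python. It stacks two linear layers with no activation function in between, then builds a single layer that gives the same output. All the numbers and the helper names (`matvec`, `matmul`, `add`) are made up for illustration.

```python
def matvec(W, x):
    """Multiply matrix W by vector x."""
    return [sum(w * v for w, v in zip(row, x)) for row in W]

def matmul(A, B):
    """Multiply matrix A by matrix B."""
    cols = list(zip(*B))
    return [[sum(a * c for a, c in zip(row, col)) for col in cols] for row in A]

def add(u, v):
    """Add two vectors element by element."""
    return [a + c for a, c in zip(u, v)]

# Hypothetical two-layer network with NO activation function.
W1, b1 = [[1.0, 2.0], [0.0, -1.0]], [0.5, 1.0]
W2, b2 = [[2.0, 1.0], [-1.0, 3.0]], [0.0, -2.0]
x = [3.0, -1.0]

# Layer 1 followed by layer 2.
two_layers = add(matvec(W2, add(matvec(W1, x), b1)), b2)

# One equivalent layer: W = W2 * W1 and b = W2 * b1 + b2.
W = matmul(W2, W1)
b = add(matvec(W2, b1), b2)
one_layer = add(matvec(W, x), b)

print(two_layers, one_layer)  # both print as [5.0, 2.5]
```

However many linear layers you stack, you can always fold them into one like this, so the extra layers buy you nothing.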

That is why we use activation functions for producing the output of every node in every layer. This introduces non-linearity in our network which enables the network to learn.

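Such a combination of linear and non-linear functions is what lets a neural network approximate very complex functions. In theory, a network with enough hidden units can approximate any continuous function on a bounded input range as closely as we like.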

Are there different activation functions that I can use?

There are many different types of activation functions that have been used through the ages. But instead of going through all of them in this article, I will explain the 4 most popular and useful activation functions that are currently used in research as well as in the industry.

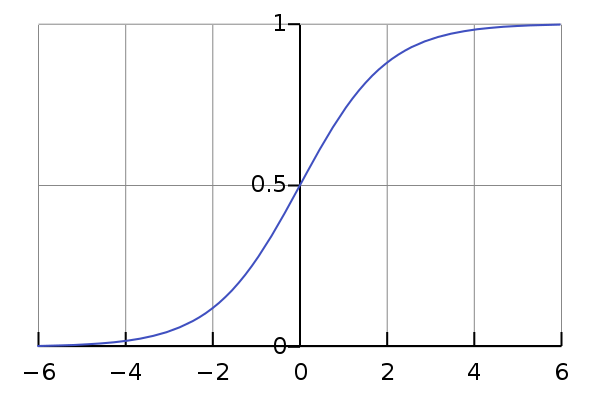

Sigmoid(): A sigmoid activation function turns an activation into a value between 0 and 1. It is useful for binary classification problems and is mostly used in the final output layer of such problems. On the downside, sigmoid activation slows down gradient descent because its slope is close to zero for large positive and large negative values. A sigmoid activation is represented mathematically as the following equation:

> Sigmoid(z) = 1 / (1 + exp(-z))
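
Here is the sigmoid formula as a quick Python sketch, using only the standard `math` module. The sample inputs are arbitrary values I picked. This plain version is fine for moderate inputs, but `math.exp` can overflow for very large negative z.

```python
import math

def sigmoid(z):
    """Sigmoid(z) = 1 / (1 + exp(-z)); squashes z into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

# Arbitrary sample activations, from very negative to very positive.
for z in (-10.0, -2.0, 0.0, 2.0, 10.0):
    print(z, sigmoid(z))
```

Notice how the outputs for -10 and 10 are almost exactly 0 and 1. That flat region is where the slope becomes tiny.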

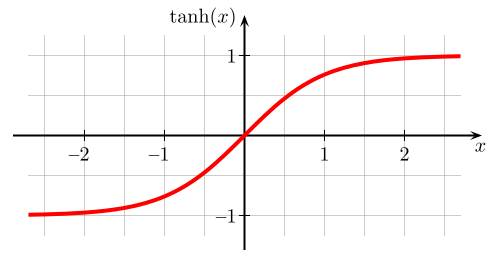

Tanh(): A tanh activation function turns an activation into a value between -1 and +1. In hidden layers, it usually works better than the sigmoid activation function because its outputs are centered around zero. It is a popular activation function for the hidden layers. Mathematically, it is represented as:

> tanh(z) = [exp(z) - exp(-z)] / [exp(z) + exp(-z)]
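
As a sanity check, this small sketch writes the tanh formula out by hand and compares it with Python's built-in `math.tanh`. The function name `tanh_from_formula` and the sample inputs are mine.

```python
import math

def tanh_from_formula(z):
    """tanh(z) = [exp(z) - exp(-z)] / [exp(z) + exp(-z)]."""
    return (math.exp(z) - math.exp(-z)) / (math.exp(z) + math.exp(-z))

# Arbitrary sample activations; the two columns should agree.
for z in (-2.0, 0.0, 2.0):
    print(z, tanh_from_formula(z), math.tanh(z))
```

In practice you would just call `math.tanh` (or your framework's version), which also stays safe for large inputs where the hand-written formula would overflow.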

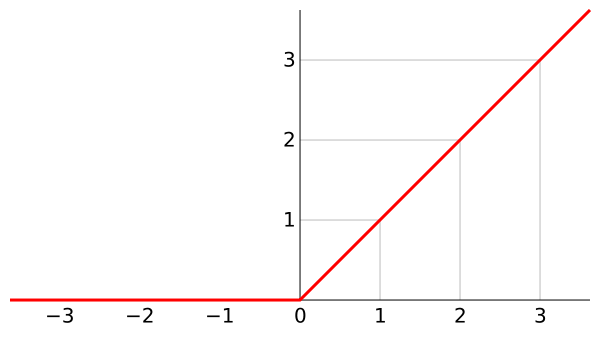

ReLU(): ReLU (Rectified Linear Unit) sounds really complex and fancy, but all it does is turn negative values into zero. That’s all it does. It is the most popular activation function and the default choice for the hidden layers of most neural networks. Mathematically, it is represented as:

> ReLU(z) = max(0, z)
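
In code, ReLU really is a one-liner. A minimal sketch with a few arbitrary sample values:

```python
def relu(z):
    """ReLU(z) = max(0, z); negative values become zero, the rest pass through."""
    return max(0.0, z)

values = [-3.0, -0.5, 0.0, 2.0]
print([relu(z) for z in values])  # prints [0.0, 0.0, 0.0, 2.0]
```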

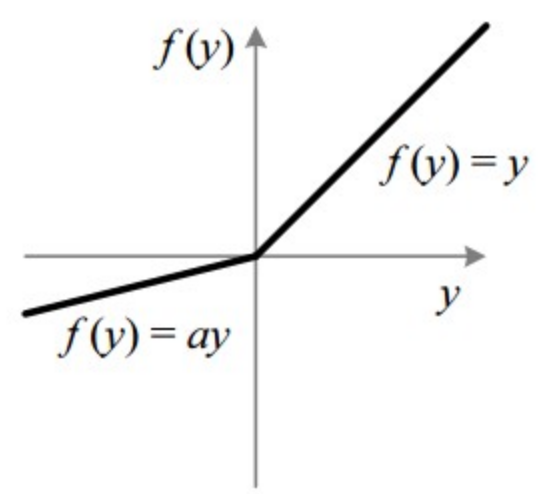

Leaky ReLU: One problem with ReLU is that the derivative (slope) for negative values is zero, so a node whose input is negative passes no gradient back during training. In some cases, we may not want that. To combat that problem, we can use a leaky ReLU, which gives negative values a small non-zero slope (0.01 in the formula below). But most of the time, a plain ReLU will do just fine. Mathematically, it is represented as:

> Leaky_ReLU(z) = max(0.01*z, z)
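
Leaky ReLU is just as short. In this sketch, the parameter name `negative_slope` is my own choice, with the 0.01 from the formula as its default. The `max` trick only works while that slope is between 0 and 1.

```python
def leaky_relu(z, negative_slope=0.01):
    """Leaky_ReLU(z) = max(negative_slope * z, z), for 0 < negative_slope < 1."""
    return max(negative_slope * z, z)

# Arbitrary sample activations: negatives are shrunk, positives are untouched.
for z in (-2.0, 0.0, 3.0):
    print(z, leaky_relu(z))
```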

The advantage of ReLU and Leaky ReLU is that the derivative (slope) is exactly 1 for z > 0, no matter how large z gets, whereas the slopes of sigmoid and tanh shrink toward zero for large values. Hence, the algorithm typically learns much faster than with sigmoid or tanh.
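
To put numbers on those slopes, here is a rough sketch that uses the standard derivative formulas for each function. The helper names and sample points are mine, and I have arbitrarily set the ReLU slope at exactly z = 0 to 0.

```python
import math

def sigmoid_slope(z):
    """Derivative of sigmoid: s * (1 - s), where s = Sigmoid(z)."""
    s = 1.0 / (1.0 + math.exp(-z))
    return s * (1.0 - s)

def tanh_slope(z):
    """Derivative of tanh: 1 - tanh(z)^2."""
    return 1.0 - math.tanh(z) ** 2

def relu_slope(z):
    """Derivative of ReLU: 1 for positive z, 0 otherwise."""
    return 1.0 if z > 0 else 0.0

def leaky_relu_slope(z, negative_slope=0.01):
    """Derivative of leaky ReLU: 1 for positive z, a small slope otherwise."""
    return 1.0 if z > 0 else negative_slope

# Arbitrary sample points: one very negative, one small, one large.
for z in (-5.0, 0.5, 5.0):
    print(z, sigmoid_slope(z), tanh_slope(z), relu_slope(z), leaky_relu_slope(z))
```

At z = 5 the sigmoid and tanh slopes are already close to zero, while ReLU and leaky ReLU still have a slope of 1.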

So, these are the activation functions that are used everywhere from research to industry.

But hey, how do I know which one to use?

So, the rules of thumb for choosing activation functions are pretty simple:

1. If you need to output a value between 0 and 1, like in binary classification, then use a sigmoid activation function in the output layer. For all other cases, don’t use it.
2. ReLU is the default choice for all the other cases. But in some cases, you can use tanh as well. Try playing with both if you are not sure which one to use.
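
Putting the two rules together, here is a toy forward pass for a binary classifier: ReLU in the hidden layer and sigmoid on the output. Everything in it is hypothetical, including the weights, the input, and the helper name `dense`. It is a sketch of the idea, not a trained model.

```python
import math

def relu(z):
    return max(0.0, z)

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def dense(W, x, b, activation):
    """One layer: compute W * x + b, then apply the activation to each node."""
    return [activation(sum(w * v for w, v in zip(row, x)) + b_i)
            for row, b_i in zip(W, b)]

# Hypothetical weights: 2 inputs -> 3 hidden nodes -> 1 output node.
W_hidden = [[0.5, -1.0], [1.0, 1.0], [-2.0, 0.5]]
b_hidden = [0.0, -0.5, 1.0]
W_out = [[1.0, -1.0, 0.5]]
b_out = [0.1]

x = [1.0, 2.0]
hidden = dense(W_hidden, x, b_hidden, relu)             # rule 2: ReLU by default
probability = dense(W_out, hidden, b_out, sigmoid)[0]   # rule 1: sigmoid for 0..1

print(hidden, probability)
```

Swapping `relu` for `math.tanh` in the hidden layer is a one-word change, which makes it easy to try both.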

That’s it about activation functions. They are used to make the network non-linear and you’ve got a few choices as to which activation function you can use. I hope I have successfully demystified activation functions for you and you understand them a bit better now.